Only a couple of months after the Apple Card’s August 2019 release, the New York State Department of Financial Services launched an investigation into the credit limit algorithm used by Apple’s banking partner, Goldman Sachs. This investigation came on the heels of a series of viral tweets from prominent men in the tech industry claiming that their wives had received significantly lower limits, despite including similar information in their applications.

“Goldman Sachs indicated … that some of the high-profile women who received lower credit limits had most of their existing credit via authorized user cards,” says Ted Rossman, industry analyst at CreditCards.com. This doesn’t “carry as much weight as being the primary account holder.”

Being an authorized user on a card is not the same as being a joint account holder. Unlike joint account holders (a rare arrangement for personal credit cards), authorized users can use a card’s credit line but aren’t responsible for paying the balance. A 2010 Federal Reserve report indicated that married women were twice as likely as married men to have authorized user accounts make up the bulk of their credit history.

In response to online criticism, Goldman Sachs has announced that it will now allow account holders to add authorized users to their cards. While this might quell criticism of the bank, it does not solve the alleged problem that women are receiving lower credit limits, and it fails to address any of the additional factors that could have created such a discrepancy.

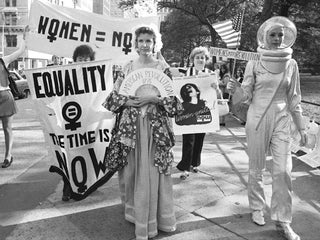

An industry history of sexism

While this news about the Apple Card has started a conversation around potential bias in the credit industry, these issues aren’t new. Fifty years ago, it was commonplace for women to receive lower credit limits than men. The Smithsonian found that women’s wages could be “discounted … by as much as 50 percent” when lenders calculated limits. And that was only after women were able to cross the incredibly high threshold of getting approved in the first place – often by bringing a man to co-sign.

This changed in 1974 when the Equal Credit Opportunity Act (ECOA) was enacted. As amended, the law bars lenders from discriminating against people based on race, color, religion, national origin, sex, marital status, age or whether the applicant receives public assistance.

Though the ECOA requires lenders to approve applicants and decide terms without asking any questions about those protected groups, it has not completely eliminated credit discrepancies for women:

- In 2012, women’s credit card interest rates were half a point higher than men’s.

- In 2017, a study found that women were more likely to be denied mortgages, and when they were approved, they paid higher interest rates than men.

- Also in 2017, more women than men reported that their credit took a hit after divorce.

- In 2018, the Federal Reserve found that single women generally have lower credit scores than single men.

These studies are far from conclusive. Since credit bureaus and lenders must make decisions without asking any questions about these protected groups, there isn’t enough data to draw significant conclusions. However, the results that are available indicate a troubling trend: women might not actually have equal access to credit and fair terms.

A high credit score doesn’t always equal the best terms

Though FICO – creator of the most widely used credit scoring model – is somewhat secretive about the specifics that go into credit scoring, we know the approximate weights of the five main factors in its model:

- 35% payment history

- 30% credit utilization

- 15% length of credit history

- 10% new credit

- 10% credit mix

Your credit score is an important factor in whether you’re approved for credit and in the limit you receive, but it’s not the only thing lenders look at. They’ll also consider other factors like your salary and debt-to-income ratio. While this gives lenders a fuller picture of your financial situation, it can put women at a disadvantage.

“Because many women have lower incomes and more debt (especially student debt … ), this could contribute to unintentional [or] ingrained bias,” says Rossman.

With the gender pay gap and a higher debt burden, it can be harder for women to get the best loan terms. And with potentially lower limits and higher costs, women likely use more of their available credit each month than men. Since credit utilization accounts for 30% of your credit score, that can have a significant impact.
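To make the utilization point concrete, here is a minimal sketch in Python. The dollar figures and the `utilization` helper are hypothetical and chosen only for illustration; they are not drawn from any lender’s data. The sketch shows that the same monthly balance produces a much higher utilization percentage on a lower limit.

```python
def utilization(balance: float, limit: float) -> float:
    """Return credit utilization as a percentage of the credit limit."""
    if limit <= 0:
        raise ValueError("limit must be positive")
    return 100 * balance / limit


# Hypothetical figures: identical monthly spending, different credit limits.
monthly_balance = 1_500
for limit in (5_000, 15_000):
    pct = utilization(monthly_balance, limit)
    print(f"limit ${limit:,}: {pct:.0f}% utilization")
```

In this example, the cardholder with the $5,000 limit shows 30% utilization while the cardholder with the $15,000 limit shows 10%, even though both spent the same amount.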

Lender algorithms

Lenders often streamline the process for determining an applicant’s creditworthiness by creating an algorithm that can help predict risk. What factors go into the algorithm and how much weight each one has will vary by issuer.

As detailed in the New York Times, J.P. Martin, an executive at Canadian Tire, created an algorithm in 2002 for the store’s credit card. By analyzing what cardholders were purchasing and where they spent their money, he could predict how likely they were to pay their bills. For instance, “people who bought cheap, generic automotive oil were more likely to miss a credit-card payment than someone who got the expensive, name-brand stuff.”

Though Canadian Tire continued to use more traditional methods for determining risk – citing fear that customers would be uncomfortable with that level of surveillance – Martin’s model proved to be much more accurate.

By 2009, though, “most of the major credit-card companies [had] set up systems to comb through cardholders’ data,” according to the New York Times, and the results could lead to cut credit lines or changed interest rates.

Gender proxies

This level of scrutiny has the potential to discriminate against women and other protected groups by assigning risk to spending patterns or application answers that could be associated with a specific group of people.

Algorithms can “learn patterns from what we call proxies, which aren’t officially gender but may serve to predict gender,” says Briana Vecchione, an information science Ph.D. candidate at Cornell University. “One classic example is occupation. [If an applicant’s occupation has certain features and] is a higher-income occupation, there’s this social bias to assume that the applicant is male instead of female.”

For instance, replace oil brands in the example above with stores or occupations. If Sephora shoppers or people who list the occupation of homemaker on an application were deemed risky, the result would skew against women – even though gender is never specifically mentioned.
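The proxy effect described above can be sketched in a few lines of Python. Everything here is hypothetical: the eight applicants, the `RISKY_OCCUPATIONS` rule and the function names are invented for illustration and do not represent any lender’s actual model. Gender is stored only so the outcome can be audited afterward; the approval rule never reads it.

```python
from collections import Counter

# Hypothetical applicants as (gender, occupation) pairs.
# Gender is kept only for the audit step; the rule below never sees it.
applicants = [
    ("F", "homemaker"), ("F", "homemaker"), ("F", "engineer"), ("F", "teacher"),
    ("M", "engineer"), ("M", "engineer"), ("M", "teacher"), ("M", "homemaker"),
]

RISKY_OCCUPATIONS = {"homemaker"}  # hypothetical rule, not any lender's policy


def approve(occupation: str) -> bool:
    """Approve unless the occupation is flagged as risky. Gender is not an input."""
    return occupation not in RISKY_OCCUPATIONS


def approval_rates(rows):
    """Return the share of approved applicants for each gender."""
    totals, approved = Counter(), Counter()
    for gender, occupation in rows:
        totals[gender] += 1
        approved[gender] += approve(occupation)  # True counts as 1
    return {g: approved[g] / totals[g] for g in totals}


rates = approval_rates(applicants)
print(rates)
```

With this made-up data, half of the women are approved compared with three quarters of the men, even though the rule looks only at occupation. Comparing outcomes by group in this way is one simple check an auditor might run.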

“Do we really want those patterns reflected in our lending decisions? I would argue, no,” says Vecchione.

What is the black box?

In the case of Canadian Tire, Martin created the algorithm by hand and could see and understand how he reached his conclusions. Theoretically, if any conclusions were discriminatory, he’d be able to notice and remove any offending components. But finding those bugs is becoming increasingly difficult as more lenders are outsourcing that process to machines.

In the broader public, there’s this “assumption that the black box is producing outcomes that are fair and just,” says Amy Traub, associate director of policy and research at Demos.

But proving otherwise isn’t easy. Companies might have insight into what type of data is being used but not always how decisions are being made. And regulations aren’t in place to require much oversight.

“It’s an overwhelmingly unregulated space,” says Vecchione. “And lenders want to keep it that way.”

Earlier this year, the House Financial Services Committee held a hearing about holding the credit bureaus accountable, and a bill has been introduced to provide a little more transparency and oversight into the industry. The outcome of these efforts, though, is yet to be seen. And experts in the industry are not in agreement about how best to move forward.

“There has been a decades-long discussion [in the academic sphere about] how … we evaluate these kinds of systems,” says Vecchione. “Who should be evaluating them? Should it be within the company? Should the company hire someone to do this? … There are still a lot of open questions about what it means to create a [fair and unbiased] algorithm.”

What can you do to combat this problem?

This isn’t an exhaustive list of the ways women and minorities could be put at a disadvantage, since the credit industry seems to mirror already-existing societal inequalities.

“Many of these factors that are discriminatory are not just discriminatory against women but may disproportionately affect women,” Vecchione says. “The degree to which these affect women vary by woman. And it emerges from socioeconomic factors, things like resources, privilege and power.”

While these factors make it difficult to ensure that everyone has equal access to credit, there are some steps you can take to minimize the effect.

- Make sure that you have credit in your own name and not just as an authorized user on someone else’s card. If you’re unsure, you can call your bank to find out.

- Pay your credit card bill as often as you can. Though the gender pay gap might put women at a slight disadvantage for credit utilization, the answer doesn’t have to be to use less credit. Issuers typically report the balance on your statement closing date, so paying your bill two or three times a month instead of once can lower your reported utilization without changing how much you spend.

- Check your credit often. Most banks let customers check their credit score for free through their online accounts. If your bank doesn’t offer that, you can sign up for similar services on websites like Mint. You can also pull a free copy of your credit report once a year from each of the three major credit bureaus at AnnualCreditReport.com. Both of these tools can help you understand how you look to potential lenders and help you monitor for any suspicious activity.

- Speak up if something is wrong. If you find an error on your credit report or believe your card terms aren’t fair, say something. According to the FTC, 20% of American consumers see errors on their credit report. Combined with the potential bias in the industry as a whole, errors will only make things worse. You can dispute errors directly with the credit bureaus.
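The tip above about paying more than once a month comes down to simple arithmetic, sketched here in Python. The sketch assumes the issuer reports the statement closing balance to the credit bureaus, which is common but not universal. The `reported_utilization` function and all dollar amounts are hypothetical.

```python
def reported_utilization(charges, payments, limit):
    """Utilization (%) based on the balance left when the statement closes.

    Assumes the issuer reports the statement closing balance to the bureaus.
    """
    closing_balance = max(sum(charges) - sum(payments), 0)
    return 100 * closing_balance / limit


limit = 5_000
charges = [800, 700, 500]  # hypothetical spending during one billing cycle

# No payments before the statement closes: the full balance is reported.
once = reported_utilization(charges, payments=[], limit=limit)

# Two payments made during the cycle: same spending, lower reported balance.
often = reported_utilization(charges, payments=[800, 700], limit=limit)

print(f"pay once after closing: {once:.0f}%")
print(f"pay twice mid-cycle:    {often:.0f}%")
```

In this example the reported utilization falls from 40% to 10% with no change in total spending.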

Until there are more policies in place to bring oversight to this shadowy industry, it’s unfortunately up to us to make sure we’re treated fairly. But if the Apple Card news last month showed us anything, it’s that if we band together, we have a better chance of making sure that we all have equal access to credit.

If you have any stories about bias in the credit industry, we’d love to hear them. You can send us an email at tohercredit@creditcards.com.
